Incremental Data Load: Hevo transfers modified data in real time. This ensures efficient utilization of bandwidth on both ends.

Hevo Is Built To Scale: As the number of sources and the volume of your data grow, Hevo scales horizontally, handling millions of records per minute with very little latency.

#Dag airflow free#

Hevo Data, a no-code data pipeline, helps load data from any data source, such as databases, SaaS applications, cloud storage, SDKs, and streaming services, and simplifies the ETL process. It supports 100+ data sources (including 30+ free data sources) like Asana, and setup is a 3-step process: select the data source, provide valid credentials, and choose the destination.

Airflow is built to keep scaling as your workloads grow.

#Dag airflow code#

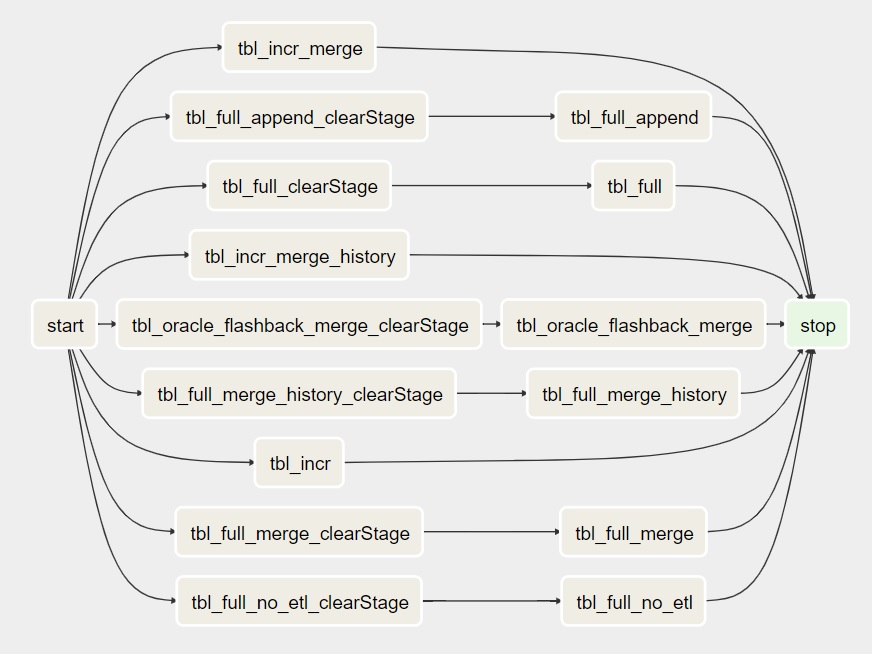

Apache Airflow, like a spider in a web, sits at the heart of your data processes, coordinating work across multiple distributed systems. It also includes a range of building blocks that let users connect the various technologies found in today's technology landscapes.

Another useful feature of Apache Airflow is its backfilling capability, which lets users easily reprocess previously processed data. This feature can also be used to recompute any dataset after modifying the code.

Dynamic: Airflow pipelines are written in Python and can be generated dynamically. This makes it possible to write code that instantiates pipelines dynamically.
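To make the "Dynamic" point concrete, here is a minimal, library-independent sketch of generating pipelines in a loop. `PipelineSpec`, `SOURCES`, and `build_pipeline` are hypothetical names used only for illustration; they are not part of Airflow's API.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineSpec:
    """Hypothetical stand-in for a pipeline definition (not an Airflow class)."""
    pipeline_id: str
    tasks: list = field(default_factory=list)


# Hypothetical source names; in practice these might come from a config file.
SOURCES = ["orders", "customers", "payments"]


def build_pipeline(source: str) -> PipelineSpec:
    """Create one extract -> transform -> load pipeline for a single source."""
    steps = ["extract", "transform", "load"]
    return PipelineSpec(
        pipeline_id=f"sync_{source}",
        tasks=[f"{step}_{source}" for step in steps],
    )


# One pipeline per source, generated in a loop instead of written by hand.
PIPELINES = {spec.pipeline_id: spec for spec in map(build_pipeline, SOURCES)}
```

In an Airflow project, the same pattern would typically build a DAG object inside the loop and expose it at module level so the scheduler can discover it. Adding a new source then means adding one entry to the list, not writing a new pipeline file.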

#Dag airflow software#

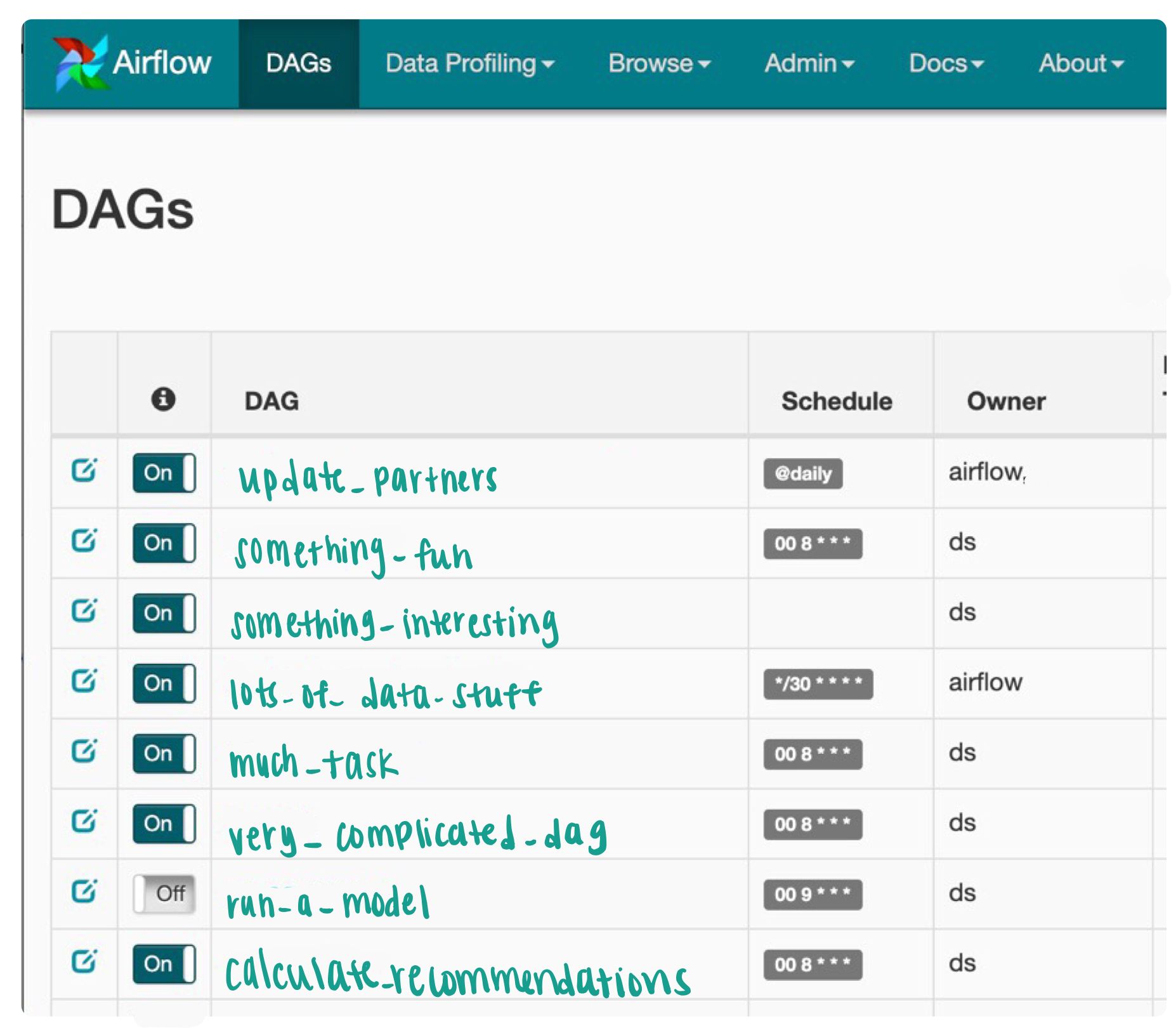

In this article, you'll learn more about testing Airflow DAGs. One of Apache Airflow's guiding principles is that your DAGs are defined as Python code. Because data pipelines can be treated like any other piece of code, they can be integrated into a standard software development lifecycle using source control, CI/CD, and automated testing.

Although DAGs are entirely Python code, testing them effectively means taking into account their unique structure and their relationship to other code and data in your environment. This guide goes over a few types of tests that we would recommend to anyone running Apache Airflow in production, such as DAG validation testing, unit testing, and data and pipeline integrity testing.

Introduction to Apache Airflow

Apache Airflow is an open-source, batch-oriented framework for building, developing, and monitoring data workflows. Airflow was created at Airbnb in 2014 to address big data and complex data pipeline issues. Using a built-in web interface, the team wrote and scheduled processes and monitored workflow execution. Because of its growing popularity, the Apache Software Foundation adopted the Airflow project.

By leveraging standard Python features, such as datetime formats for task scheduling, Apache Airflow enables users to build scheduled data pipelines efficiently.
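As a rough illustration of what DAG validation testing checks, the sketch below verifies that a set of task dependencies contains no cycle, using only Python's standard library (`graphlib`, Python 3.9+). It does not use Airflow itself; the task names and the helpers `validate_dag` and `is_valid` are assumptions made for this example.

```python
from graphlib import CycleError, TopologicalSorter


def validate_dag(dependencies: dict) -> list:
    """Return a valid execution order, or raise ValueError if there is a cycle.

    `dependencies` maps each task name to the set of tasks it depends on.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # The second element of args holds the tasks that form the cycle.
        raise ValueError(f"cycle detected: {exc.args[1]}") from exc


def is_valid(dependencies: dict) -> bool:
    """Convenience wrapper for use in test assertions."""
    try:
        validate_dag(dependencies)
        return True
    except ValueError:
        return False


# Hypothetical task graphs: the second one makes "extract" depend on "load".
VALID = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
BROKEN = {"extract": {"load"}, "transform": {"extract"}, "load": {"transform"}}
```

In a real Airflow deployment, a comparable validation test commonly loads the DAG files through Airflow's `DagBag` and asserts that its `import_errors` is empty, which catches syntax errors and broken imports in addition to cycles.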
